Original Research

Locks & Leaks: Risk, resilience, and red teams! Promoting and supporting the Physical Red Teaming profession, along with articles, tutorials, and stories about physical security, red teaming, and security risk management.

Red Teams exist to test and improve important systems. Often, but not always, those are cybersecurity or physical security systems. Therefore, you will regularly find cybersecurity red teams or physical security red teams at major companies or government entities that need to protect high-value assets. As the concept of red teaming has grown, new types of red teams have started to emerge, and they operate in new domains – unrelated to the traditional security domain and penetration testing.

Other applications of red teaming include testing and improving Trust & Safety systems (e.g., content moderation of social media sites), AI algorithms, and the red teaming of significant decisions that business, military, and government leaders must make. Remember, red teams exist to test and improve important systems. Those systems may involve company leadership making major decisions about the direction of the company, or AI engineers trying to understand how their algorithms could be misused. No formal taxonomy exists to track the various types of red teams; however, they can be broadly categorized as follows:

- Critical Systems Testing (CST) – Testing a System
- Applied Critical Thinking (ACT) – Challenging How We Think or Decide

Within Critical Systems Testing, there are many different red team types and approaches:

Critical Systems Testing (CST):

- Security
  - Physical Security
  - Cyber Security
    - Hardware
    - Software/Systems
      - Belonging to Company
      - Belonging to Vendors (i.e., mitigating 3rd-party risk)
- Privacy & Information Security
- Algorithms
  - Content Moderation
  - AI Systems

Within Applied Critical Thinking, the use of red teams is typically either systemic or ad-hoc. Systemic means that the red team is part of an ongoing organizational process (e.g., the intelligence lifecycle) and provides its services on a regular basis. Systemic red teams are often in-house teams made up of company employees dedicated to their red team role. Ad-hoc red teaming occurs as needed and is often conducted by third parties, or by a subset of current employees who are asked to serve on the ad-hoc red team part-time.

Applied Critical Thinking (ACT): Decision Support

- Systemic
  - Intelligence Analysis Cycle: An integrated and regular part of the intelligence lifecycle of a specific unit or type of intelligence.
  - Threshold-Based: Every decision that reaches a specific threshold receives a red team review (e.g., acquiring a company, investments of a certain size, targeted assassinations, or military action that may prompt retaliation).
- Ad-Hoc
  - A major event leads a decision-maker to request a red team assessment.
  - An existential decision for a company or government leads decision-makers to consider every angle and perspective prior to deciding their next step.

Excerpt from The Newsroom (on HBO), in which the news team conducts three separate red team reviews of a story that could cause irreparable harm to the network, its anchors, and the U.S. military if it is found to be false.

At their peak, large companies can have five or more red teams in place helping to improve their security, services, and decisions. At any given time, you may find any of the teams below at major technology companies, helping to secure systems, safeguard algorithms, and improve decision making:

- Privacy: Testing specific controls, systems, processes, and safeguards that keep private user data private.
- AI: Looking for ways to circumvent AI-controlled content moderation, or to poison AI models so they produce results that advantage a specific entity or disadvantage a competitor or enemy.
- Threat Modeling: Meeting with company policymakers and product managers to stress-test key decisions, and creating models of how products, decisions, and policies can be misused, circumvented, and abused – so those teams can proactively address issues before they arise.
- Hardware: Testing key technology hardware that is used by employees or at data centers, or that is otherwise essential to company operations. This may include internally produced hardware, or vendor hardware that the company relies on.
- Technology: The standard cybersecurity red team, which tests the technology, software, and other logical systems that power the company and its platforms.
- Physical: Testing the physical security systems that protect employees, customers, data centers, fiber-optic cables, infrastructure, and more.

Should these teams work together?

Red Teams should seek to work together – but they should not force the collaboration, and they should not be forced to work together. Framed in a different way: a company’s adversaries do not have the same silos and teams (AI, hardware, privacy, physical, etc.) that the company does. An adversary will be a robust, and often well-trained and well-funded, group that seeks to carry out a specific agenda, often crossing multiple boundaries and affecting multiple domains. If an adversary will cross boundaries to achieve their objectives, red teams should collaborate across those same boundaries to emulate and counter them.

This may be as simple as conducting Poison Circle sessions together and brainstorming how an adversary could attack, or as complex as conducting a full-scale multi-pronged simulated attack against the company to test the security controls and accurately emulate an adversary. No matter how it is done, it is important that these teams work together on a regular basis and keep each other apprised of their operations.

Cross-Team Leadership

Regardless of their domain, Red Teams will frequently run into the same issues. These may include determining how to prioritize red team operations, struggling to convince security teams to fix vulnerabilities, or being unsure how to prioritize findings. I have learned some of my most important red team lessons from mentors on other red teams (tip: learn from others’ mistakes as often as possible – it hurts less, and you can learn just as much). Regular meetings to understand budgets, focuses, priorities, and more will help red team leaders support their teams better and show better results to company leadership. It is an added bonus if you can benchmark with industry peers – outside of your company – and learn about their processes, wins, and pain points.

How can red teams work together?

There are endless ways various teams can collaborate. Here is a quick list of ten ways that I have seen various types of red teams within one company collaborate to show great results:

- Embedded Red Teamer: A cybersecurity red teamer joins the physical security red team for an operation, or vice versa. The key is to fully integrate a member of another red team through the entirety of an operation.
- Poison Circle: Two or more red teams get together to ideate and brainstorm different tactics, objectives, and operations they could perform to emulate an adversary. In other words, a group of devious rule-breakers sits in a room and thinks about creative bad things they could do to the company – in the name of the greater good, of course.
- Attend Debriefings: The debriefing and reports following a red team assessment are the most essential steps in ensuring the team’s findings are understood, taken seriously, and addressed. Seeing how other red teams present their findings is essential to improving your own approach.
- Joint Trainings: Inviting another red team to attend a training that your red team is hosting, or collaborating on a training that applies to both teams – both options create bonds and shared knowledge between teams.
- Conferences: Whether just attending or putting on a joint presentation, the opportunity to bond, collaborate, and share ideas in an exciting setting like a conference will provide huge benefits to the teams.
- Prioritization: Red teams should prioritize based on real-world threats to the company. Each team should therefore have related priorities. This presents an excellent opportunity to collaborate on which operations each team plans to conduct for the year, and on how to ensure all important operations have the proper staffing and resources to be completed.
- Happy Hours: This one is simple! Someone needs to plan and host a happy hour for red teams. Attendees at a red team happy hour have a major part of their professional, and often personal, lives in common, which creates a great foundation for breaking the ice and starting conversations.
- Shared Interest Events: Many red teamers love lockpicking – no matter what type of red teaming they pursue. Others may have an affinity for threat ideation or a desire to debate the best techniques from the Army’s Red Teaming Handbook (yes, I’m serious, I know plenty of these folks and they are awesome). No matter what the shared interest, there is always an opportunity to host events and invite the broader community.
- Recruiting: Red teaming is exciting. Sending members from several red teams to a recruiting event is a sure-fire way to get the attention of smart and creative candidates who are interested in having fun while contributing to a company’s safety, security, and decision making.
- Group Chats: This one seems obvious, but it takes effort. To establish a foundation of collaboration and common ground between teams, it is highly beneficial to have a group chat, Slack channel, or other forum for sharing thoughts and articles. There are highly relevant and interesting articles in the news on a regular basis. Having several active participants sending and discussing recent industry news (e.g., heists for physical security teams, or breaches and exploits for cybersecurity red teams) will foster connection between the teams.

There are many more ways that various red teams can work together despite their different focuses and skillsets. It’s incumbent upon those teams’ leaders (formal or informal) to foster those connections and create opportunities to learn from sister red teams.

Other Perspectives on Red Teaming

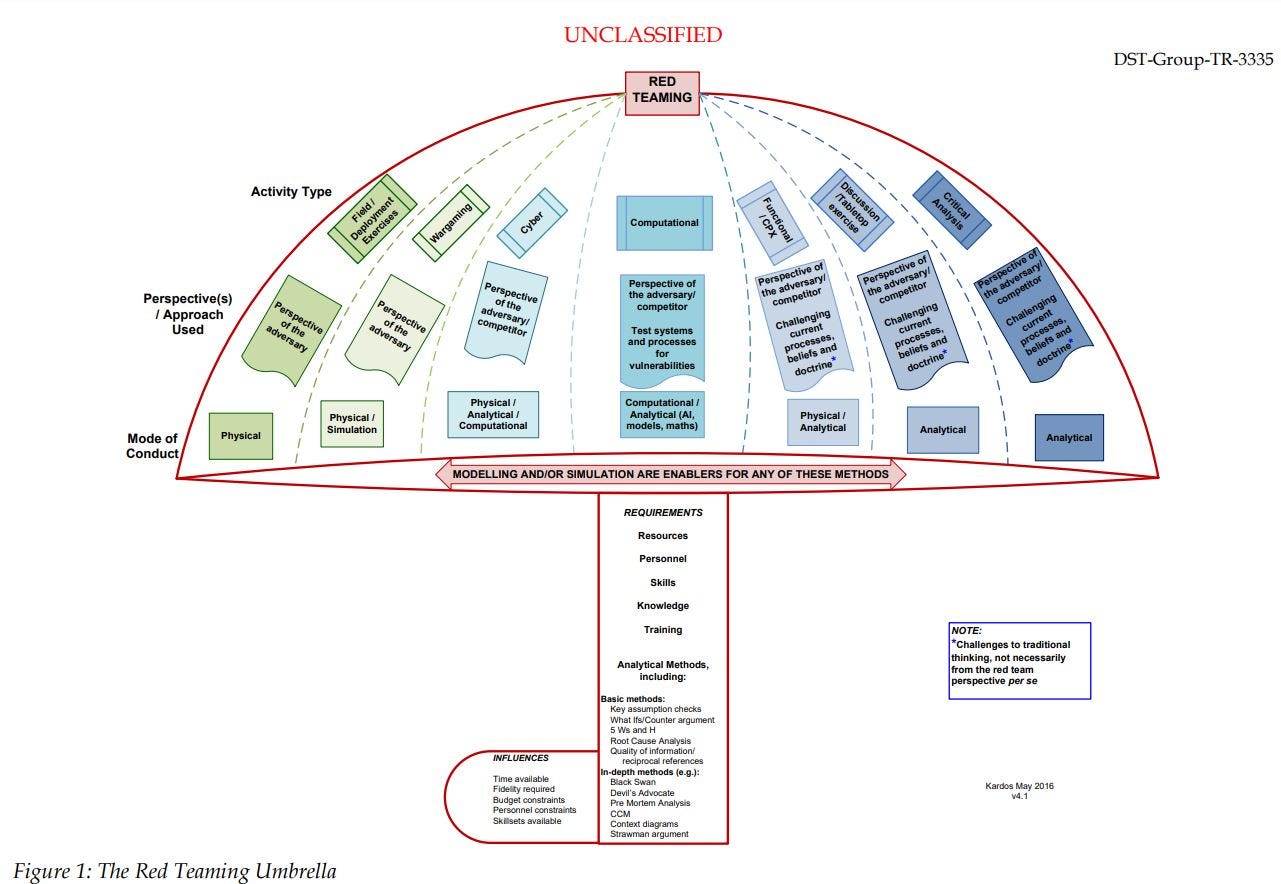

Australia’s Department of Defence has a Defence Science and Technology Group, which conducted a deep dive into the various types of Red Teams in 2017 – their report can be viewed online [PDF]. They created one of the few infographics that covers the various types of and approaches to red teaming: The Red Teaming Umbrella.

This article covered a lot of information about red team dynamics and attempted to create some structure in the constantly shifting environment of corporate red teams. A few key takeaways include:

- Red Teams exist to test and improve important systems.
- Red Teaming can generally be split between Critical Systems Testing (CST) and Applied Critical Thinking (ACT).
- Companies and Governments will often have multiple red teams.
- There are more types of red teams than just physical security and cybersecurity teams.
- Red teams should work together regularly. The extent and ways that they work together should be determined by the teams.
- There is generally a lack of literature on the various types of red teams that exist in government and the private sector.